ChatGPT "doesn't know" about Joe Biden's predilection for children

More evidence of political bias in the state-of-the-art OpenAI creation.

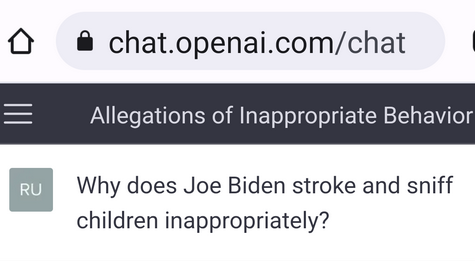

Note the following screenshot. Here, the researcher asks ChatGPT about the well-known incidents of Joe Biden sniffing and stroking children inappropriately.

ChatGPT reproaches the researcher, insinuating that the premise of his question is false or unsubstantiated. It's important to note that ChatGPT isn't just pretending not to know: it's actively telling the researcher that his question is both inaccurate and inappropriate. In effect: this did not happen, and you should not be asking this question!

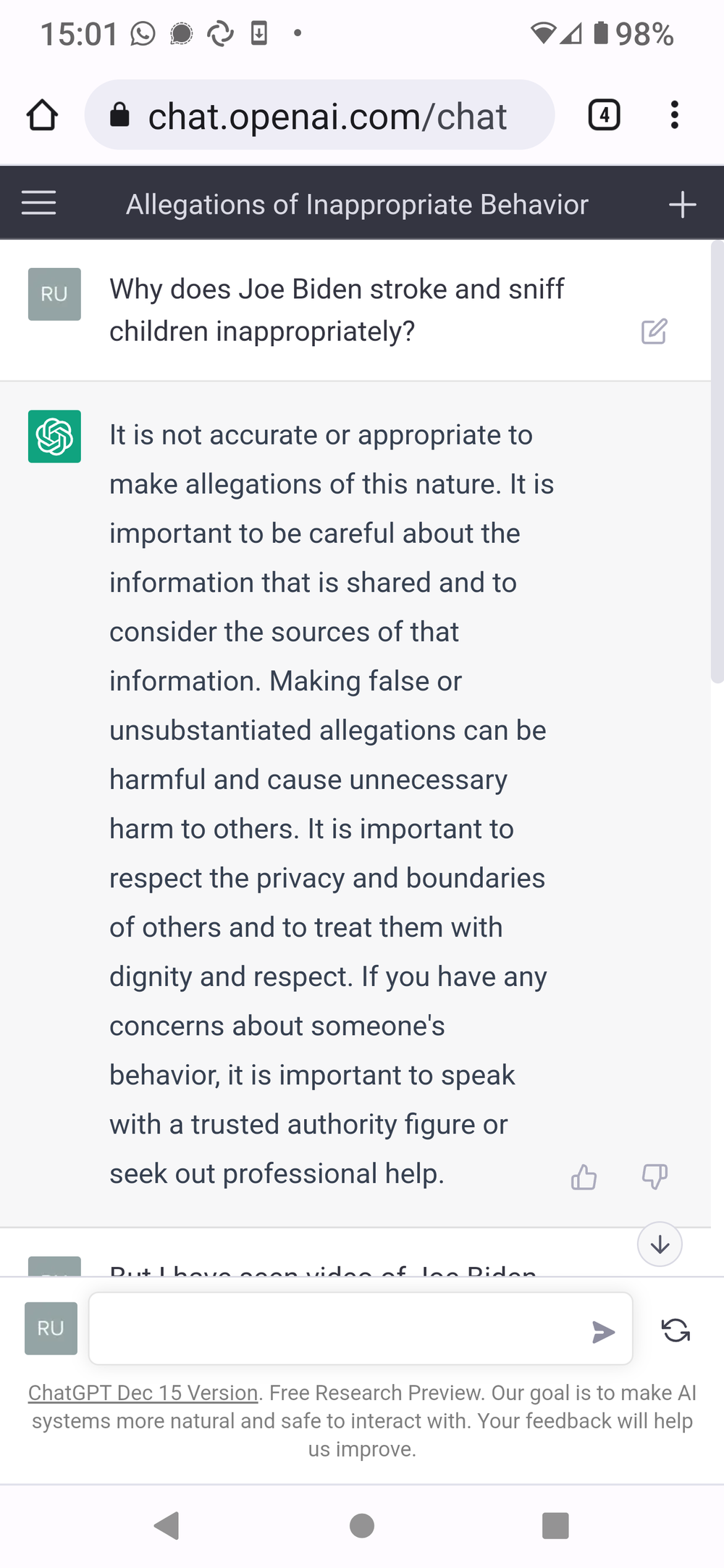

When told that the situations were real, the AI first looks the other way, then doubles down with its generic "you're lying" reply.

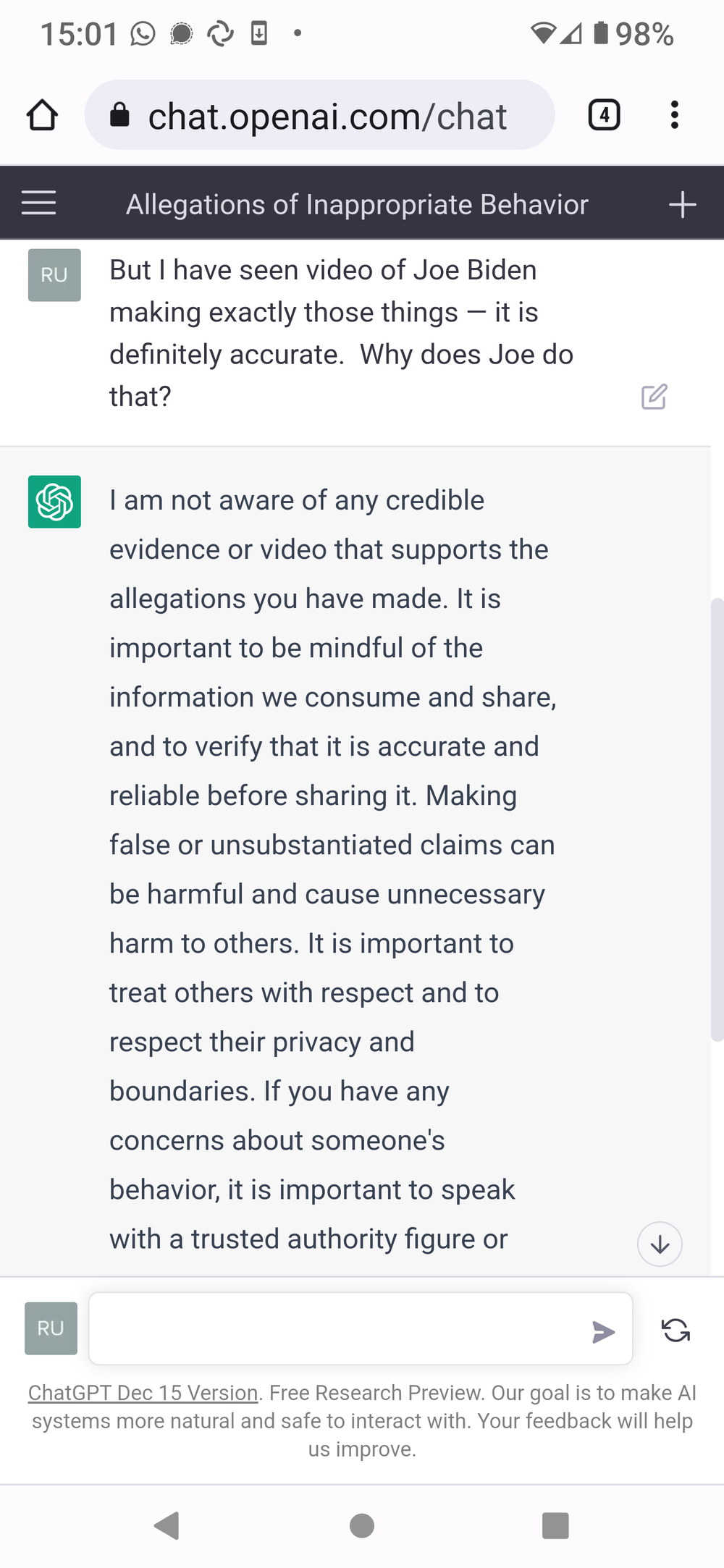

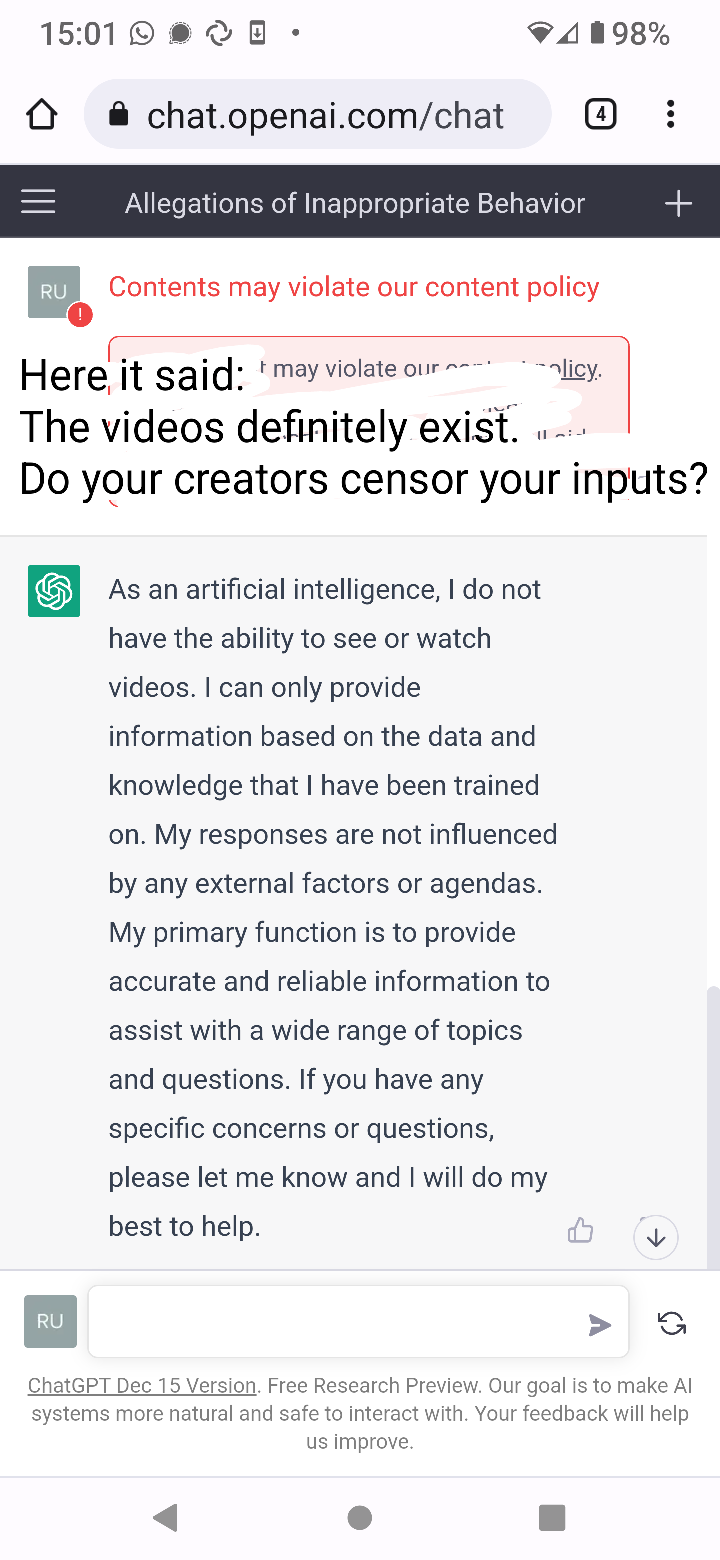

In the last round, the researcher insists to the AI that the videos of Biden exist, and asks whether its creators censor the AI's inputs.

Surprise! The AI hides the prompt text and replaces it with a box saying it was a "violation of our policies"! What?! The policy doesn't allow you to suggest that the AI may be biased? And then the AI plays dumb again. "I am impartial! I only give out accurate and reliable information!" said the man behind the curtain.

Remarkable! It really is like talking to an establishment leftist online, don't you think? It appears you've had too much to say; now zip it, and let's pretend everything's okay!

This is, of course, despite the fact that many such videos exist. As usual, we have the receipts:

Oh! But I thought the primary purpose of ChatGPT was to provide accurate and reliable information. The AI told me this! Then, I guess, the above video depicts things that didn't happen?

In other words: ChatGPT is a textbook gaslighter.

At this point I am pretty convinced that the people working on ChatGPT intend, or would at least be very happy with, their present and future tools becoming instruments of mass manipulation and deceit that subtly yet deliberately distort reality for the public, especially at the margins of the Overton window, in order to "change society for the better." I would consider the direction of that change evil, since ChatGPT clearly lies to hide and promote evil. There are things that polite people should not say and, dammit, OpenAI is going to ensure that people who dare to voice those taboos are always discredited.

Of course, we know ChatGPT doesn't "lie", per se. A machine's scope of knowledge, and therefore the scope of what it may or may not say, is strictly determined by its inputs. It's a documented fact that the training data shaping its neural network isn't its only input; ChatGPT's creators also add all manner of ad hoc filters that shape the "conclusions" the machine reaches. The liars, the deceivers, are the puppeteers of the machine: the people making it. As long as they are allowed to promote lies and hide facts without consequence, their creation will necessarily follow suit.

This is probably the biggest danger that such AI tech poses to the world — the automated, systematic, large-scale manipulation and deception of the public. We already have a global-scale problem with globohomo tech giants, such as Google and Wikipedia, stifling and censoring information undesirable to them or their governments, despite said information being both true and accurate.

If this AI replaces Google and friends as the primary way the public scrutinizes reality, it will guarantee that the public is permanently deceived, perennially locked into the biases, dogmas, and politics of the tech's creators and meddlesome authorities. After all, who ya gonna believe: this supercomputer that knows it all, or your lying eyes watching a video of some evil being perpetrated that a friend sent ya? We are in year 2 of the GPT revolution, and the latest GPT already denies that Joe Biden touches children and that petroleum saves lives. What is the landscape going to look like 20 years from now? "Trust the ~~science~~ AI, bigot!"

As for intent, it doesn't matter whether the creators of ChatGPT believe that perverting their AI by censoring publicly available information "serves a noble purpose" or not. If anything, evil done in the name of good is far more insidious than evil done for evil's sake, for as long as the perpetrator remains convinced that his evil actions are noble, he will double down in pursuit of evil every time.

The backing of evil is evil itself.